OpenAI Launches Open-Source Models, Tops Hugging Face Leaderboard

The flagship gpt-oss-120b targets high-performance desktops and servers, requiring 60 GB of VRAM: a single 80 GB GPU or several smaller ones.

OpenAI has unveiled two new open-source language models, gpt-oss-120b and gpt-oss-20b, which are rapidly climbing Hugging Face's trending chart. The models are designed to rival top-tier proprietary models in performance while being freely available under the permissive Apache 2.0 license.

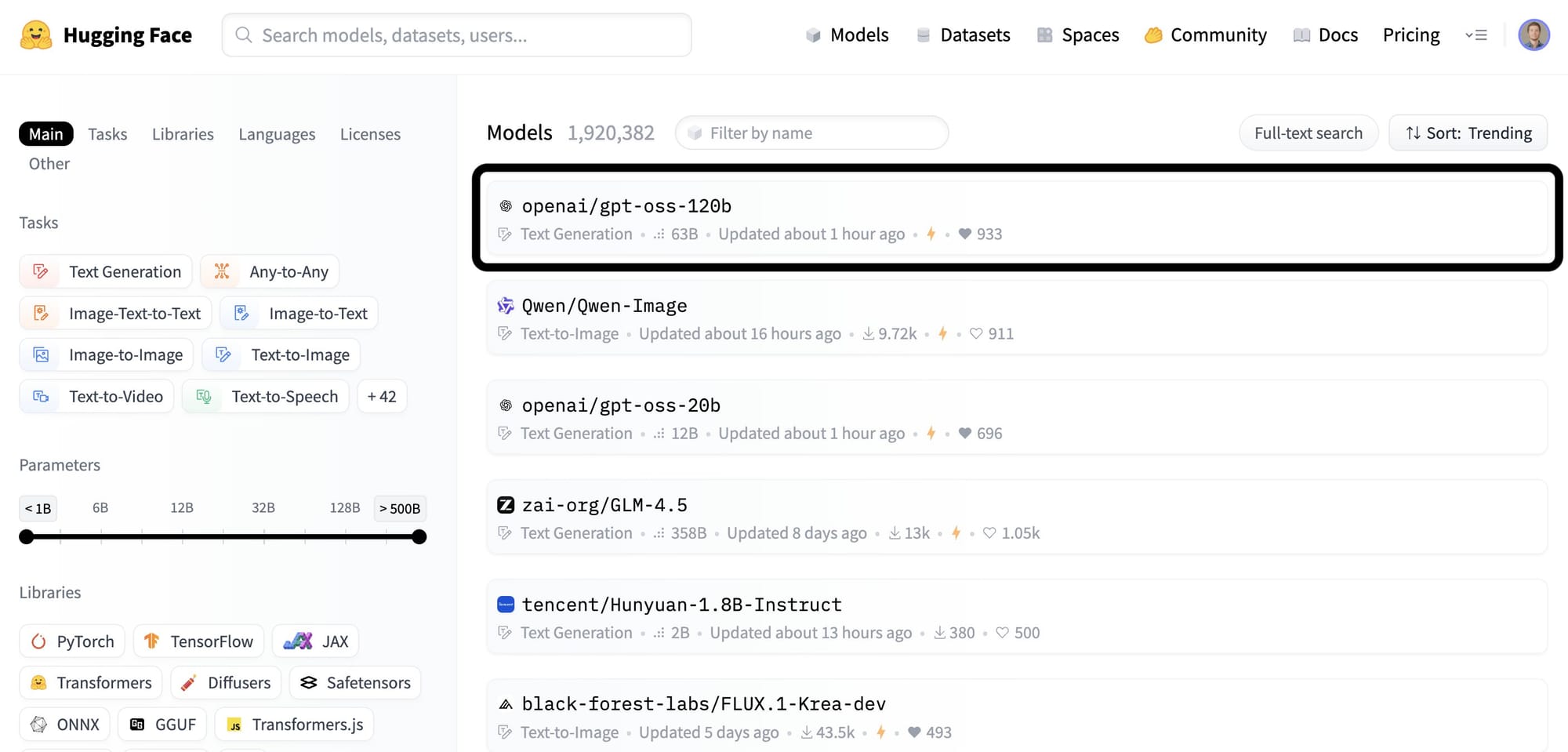

Within hours of launch, gpt-oss climbed to the top of Hugging Face's trending list, Hugging Face CEO Clem Delangue revealed. "And just like that, OpenAI gpt-oss is now the number one trending model on Hugging Face, out of almost 2 million open models," he said.

The flagship gpt-oss-120b targets high-performance desktops and servers, requiring 60 GB of VRAM, which on desktop hardware usually means pooling memory across multiple GPUs. It achieves near-parity with OpenAI's o4-mini on core reasoning benchmarks while running efficiently on a single 80 GB GPU. Meanwhile, the compact gpt-oss-20b is optimised for laptops and edge devices with just 16 GB of memory, delivering performance close to OpenAI's o3-mini.
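As a rough check on these memory figures, weight memory is approximately parameter count × bits per parameter ÷ 8. The sketch below assumes roughly 117 billion parameters for gpt-oss-120b and 21 billion for gpt-oss-20b, and treats every weight as stored at about 4.25 bits, approximating 4-bit MXFP4 quantization plus its per-block scale. These inputs are simplifying assumptions for illustration, and `estimate_weight_memory_gb` is a hypothetical helper; real deployments also need memory for the KV cache, activations, and runtime overhead.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Weight-only memory estimate in decimal gigabytes (1 GB = 1e9 bytes).

    Hypothetical helper for illustration: it ignores the KV cache,
    activations, and framework overhead, all of which add to real usage.
    """
    total_bytes = num_params * bits_per_param / 8  # 8 bits per byte
    return total_bytes / 1e9


# Assumed parameter counts; ~4.25 bits approximates MXFP4
# (4-bit values plus one shared 8-bit scale per 32-value block).
for name, params in [("gpt-oss-120b", 117e9), ("gpt-oss-20b", 21e9)]:
    print(f"{name}: ~{estimate_weight_memory_gb(params, 4.25):.1f} GB at 4.25 bits")
# gpt-oss-120b: ~62.2 GB at 4.25 bits
# gpt-oss-20b: ~11.2 GB at 4.25 bits

# The same 120b weights at 16 bits (e.g. BF16), for comparison:
print(f"~{estimate_weight_memory_gb(117e9, 16):.1f} GB at 16 bits")
# ~234.0 GB at 16 bits
```

Under these assumptions, the 120b estimate lands near the quoted 60 GB and fits within a single 80 GB GPU, while the 20b estimate leaves headroom inside a 16 GB budget for the KV cache and runtime. Real checkpoints may keep some weights at higher precision, so treat these numbers as ballpark figures only.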

Both models are available for download on Hugging Face and GitHub, and are compatible with macOS (Big Sur or later), Linux (Ubuntu 18.04 or later), and even Windows via WSL 2 on sufficiently powerful systems.

OpenAI CEO Sam Altman (@sama) celebrated the launch in an August 5, 2025 post:

"gpt-oss is out!

we made an open model that performs at the level of o4-mini and runs on a high-end laptop (WTF!!)

(and a smaller one that runs on a phone).

super proud of the team; big triumph of technology."

Built on a mixture-of-experts (MoE) architecture, the models balance strong reasoning, tool use, and efficiency. They can execute code and call web search as part of their chain-of-thought reasoning, making them particularly useful for developers, writers, and researchers.
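To illustrate what a mixture-of-experts layer does, here is a minimal, generic sketch of top-k routing in plain Python: a router scores every expert for the input, only the k highest-scoring experts run, and their outputs are blended with softmax weights. This is a textbook simplification, not OpenAI's implementation; the `moe_layer` function, the router weights, the toy experts (which simply scale their input), and k=2 are all hypothetical values chosen for readability. Skipping the unselected experts is what lets MoE models keep per-token compute well below what their total parameter count would suggest.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, router_weights: Sequence[Vector],
              experts: Sequence[Expert], k: int) -> Vector:
    """Generic top-k mixture-of-experts forward pass for one token (illustrative)."""
    # Router: one linear score per expert (dot product of its row with x).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Keep only the k experts with the highest scores.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the selected scores into mixing weights.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy setup: 4 experts, each scaling its input by a constant.
experts: List[Expert] = [lambda v, c=c: [c * vi for vi in v] for c in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[0.1, 0.0], [0.0, 0.5], [1.0, 1.0], [0.2, 0.2]]
x = [1.0, 2.0]

y = moe_layer(x, router_weights, experts, k=2)
print(y)  # approximately [2.8808, 5.7616]: experts at indices 2 and 1 are blended
```

Production MoE layers apply the same idea to batched tensors on GPUs, with many more experts and learned router weights; the explicit loop here trades speed for readability.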

"People sometimes forget that they've already transformed the field: GPT-2, released back in 2019, is Hugging Face's most downloaded text-generation model ever, and Whisper has consistently ranked in the top 5 audio models. Now that they are doubling down on openness, they may completely transform the AI ecosystem, again," Delangue added.