Microsoft Unveils New AI Inference Accelerator Maia 200 to Boost Cloud AI Performance

Microsoft claims the accelerator offers up to 30 percent better performance per dollar than its current hardware.

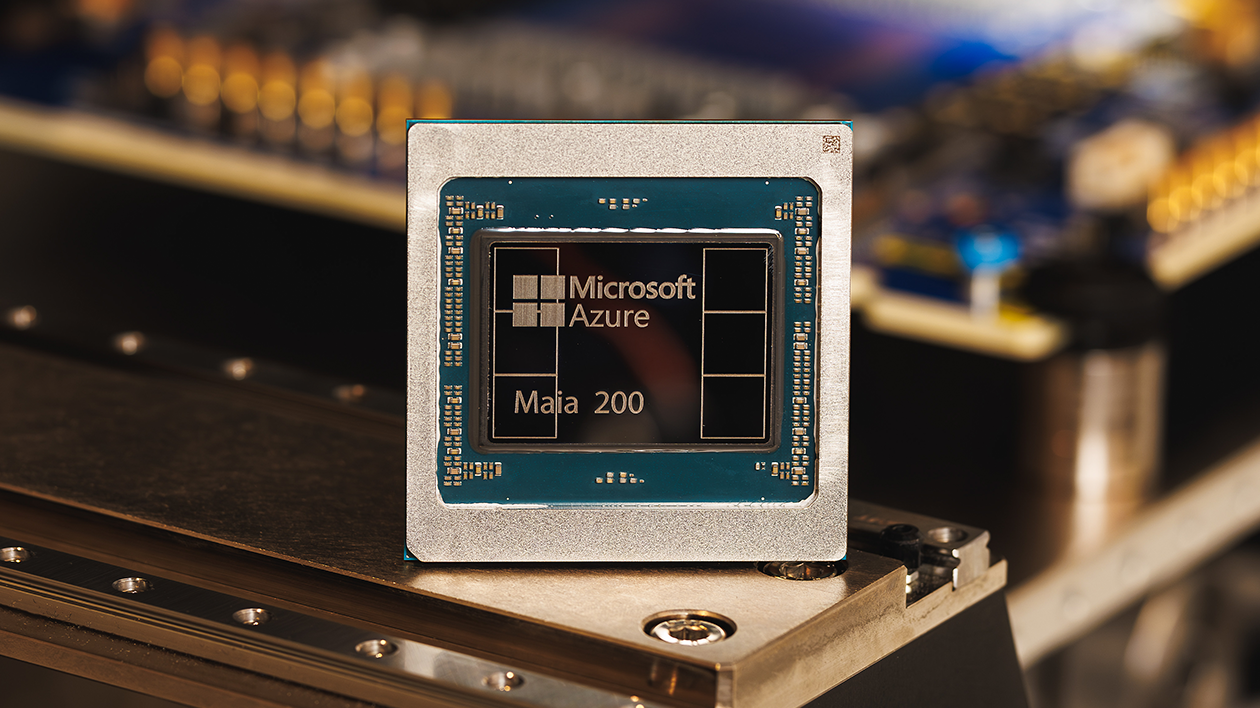

Microsoft has introduced Maia 200, a next-generation AI inference accelerator designed to improve the efficiency and economics of large-scale artificial intelligence workloads.

Maia 200 marks a significant step in Microsoft’s AI infrastructure strategy, focusing specifically on inference — the process of generating responses from AI models in real time.

Built on Taiwan Semiconductor Manufacturing Company’s (TSMC) advanced 3-nanometer process, Maia 200 integrates more than 140 billion transistors and 216 GB of high-bandwidth memory, enabling high-speed data movement crucial for demanding AI tasks.

Microsoft claims the accelerator offers up to 30 percent better performance per dollar than its current hardware and delivers significantly higher performance on low-precision workloads compared with competing cloud AI chips.
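To put the headline figure in concrete terms, the following is a minimal sketch of what a 30 percent gain in performance per dollar would mean arithmetically. The baseline spend and token count are hypothetical placeholders, not Microsoft figures, and the function names are illustrative only. The calculation assumes the full "up to" 30 percent is realised.

```python
# Illustrative arithmetic only: baseline numbers below are hypothetical,
# and 0.30 is the upper bound of Microsoft's "up to 30 percent" claim.

def cost_for_same_work(baseline_cost: float, gain: float) -> float:
    """Spend needed to do the same work after a performance-per-dollar gain."""
    return baseline_cost / (1.0 + gain)


def work_for_same_budget(baseline_work: float, gain: float) -> float:
    """Work delivered for the same spend after a performance-per-dollar gain."""
    return baseline_work * (1.0 + gain)


if __name__ == "__main__":
    gain = 0.30                  # claimed upper bound
    baseline_cost = 100.0        # hypothetical spend, in dollars
    baseline_tokens = 1_000_000  # hypothetical tokens served for that spend

    new_cost = cost_for_same_work(baseline_cost, gain)
    new_tokens = work_for_same_budget(baseline_tokens, gain)
    saving = 1.0 - new_cost / baseline_cost

    print(f"Same work now costs about ${new_cost:.2f} ({saving:.1%} less)")
    print(f"Same budget now serves about {new_tokens:,.0f} tokens")
```

Under these assumptions, a 30 percent gain in performance per dollar works out to roughly a 23 percent lower bill for the same workload, not a 30 percent one, because the gain divides the cost rather than being subtracted from it.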

Early deployments of Maia 200 are already live in Microsoft Azure’s U.S. Central region and are expected to expand to additional data centres. The chip is engineered to serve large-scale models such as GPT-5.2, as well as Microsoft 365 Copilot and services powered by Microsoft Foundry.

An early-access software development kit (SDK) that includes PyTorch integration and a Triton compiler preview is also being made available to developers to optimise workloads on the platform.

“Maia 200 is part of our heterogeneous AI infrastructure and will serve multiple models,” Microsoft said in its documentation, highlighting the accelerator’s role in advancing AI performance and cost-efficiency across the company’s cloud services.