How Google's AI Became a "Pain in the ***" For a Bengaluru Startup

In March, the company saw a tenfold increase in customer care calls

Artificial Intelligence (AI) is meant to transform businesses, change how they operate, supercharge productivity, and multiply revenue and growth several times over. In some cases, it is doing exactly that. Take NVIDIA: AI helped it become one of the most valuable companies in the world.

For others, however, it has unintentionally become a real pain. For Quick Ride, a Bengaluru-based ride-sharing startup, a glitch in Google’s AI-powered search feature led to major disruption.

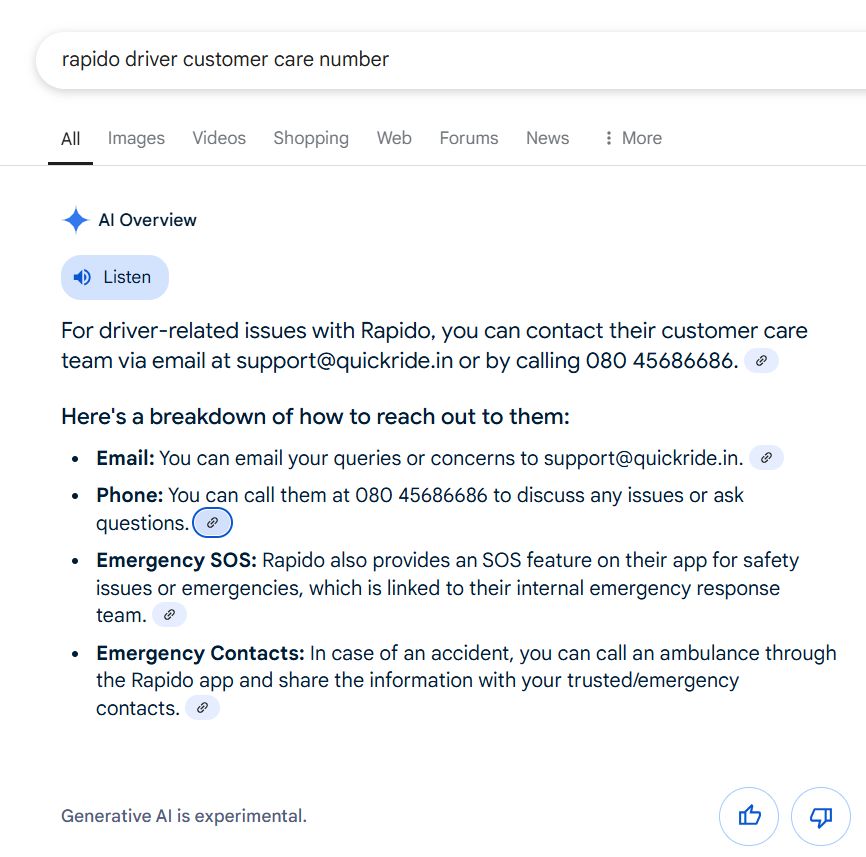

In March, the company saw a tenfold increase in customer care calls. The reason? Google’s AI Overview mistakenly listed Quick Ride’s contact details in response to queries for the driver support number of Rapido, another ride-hailing startup in the country.

The error overwhelmed Quick Ride’s limited support staff and delayed service for its actual users. Quick Ride says it reported the issue to both Google and Rapido, but that it went unresolved for weeks.

The mix-up highlights the risks of hallucinations in AI-generated summaries, particularly when used to convey critical information like contact numbers.

"We had to update our website to include Rapido's number to stop the calls from coming to our call centre," a Quick Ride employee said.

The matter has since been resolved. Still, experts note that although AI accuracy is improving, these systems remain probabilistic and prone to errors, and smaller businesses without direct support channels to large platforms are especially exposed.

The incident mirrors broader concerns about AI in search, with platforms such as Perplexity, and Google itself, facing criticism for misinformation, plagiarism, and undermining content creators.

Google's AI Overview, which began rolling out in May 2024, places AI-generated summaries at the top of search results. Powered by Google's Gemini large language model (LLM), the feature produces concise, easy-to-understand answers in natural language.

Each summary, however, carries a disclaimer noting that “Generative AI is experimental.”

This tendency of LLMs to hallucinate is holding back widespread adoption of GenAI among enterprises. Recently, OpenAI, the startup behind many of these frontier models, reported that its newer models hallucinate even more than their predecessors.

Separate research from Yale University raises critical questions about whether hallucinations can be detected at all. The researchers introduce a theoretical framework to assess whether LLMs can reliably identify their own inaccuracies without human intervention.

Their findings reveal that if an AI system is trained only on correct data (positive examples), automated hallucination detection becomes fundamentally impossible across most language scenarios.