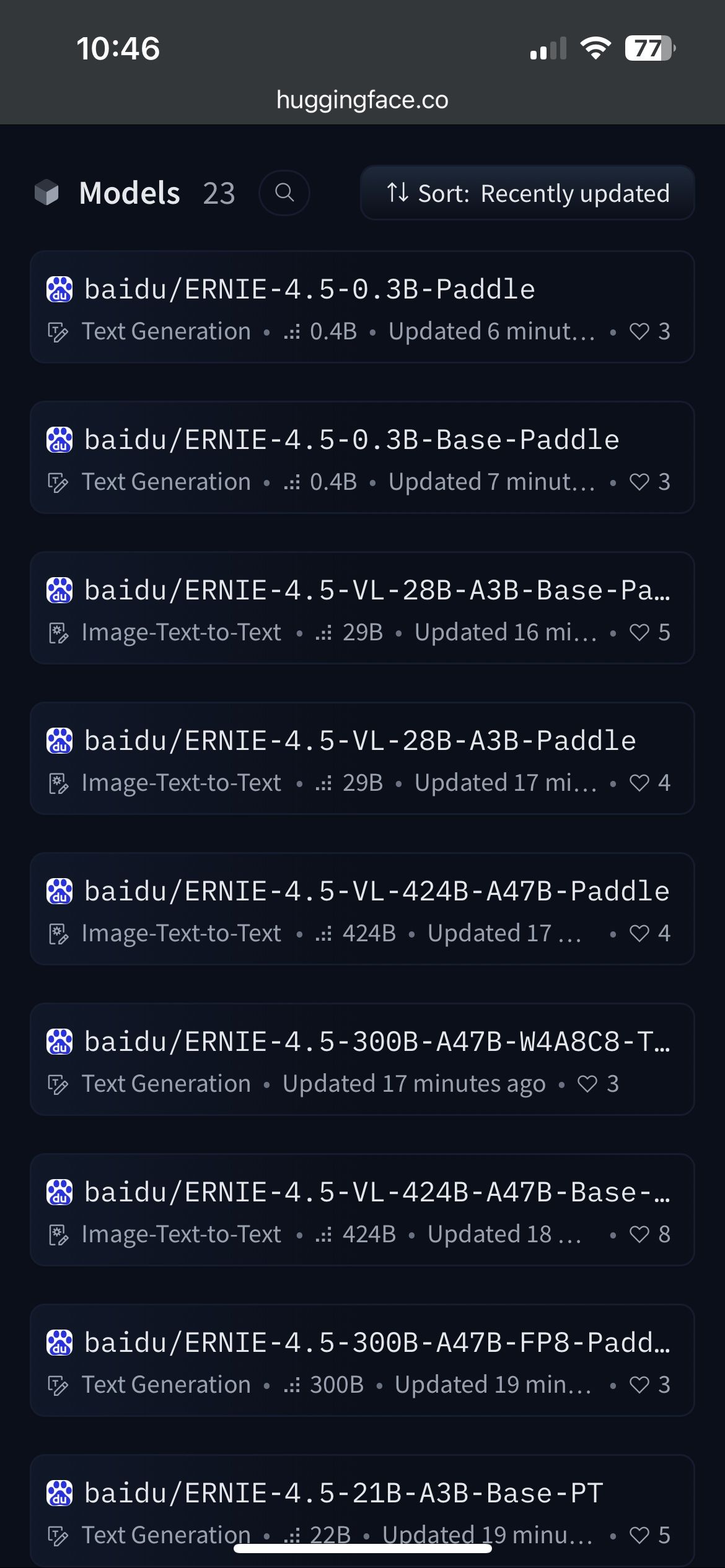

Baidu Drops 23 Open-Source Models on Hugging Face

This release brings Baidu’s ERNIE family into sharp focus, offering developers a versatile, open‑source toolkit for diverse AI tasks.

Chinese tech giant Baidu has released 23 new ERNIE 4.5 foundation models on Hugging Face at once. They range from a compact 0.3 billion parameters to a colossal 424 billion, and each comes with a 128K‑token context window and an Apache 2.0 license.

The lineup includes dense and Mixture‑of‑Experts (MoE) variants, covering text‑only and multimodal (text + vision) capabilities.

Enthusiasts on Reddit applauded the move, noting the inclusion of base models alongside powerful large‑scale versions:

“It’s refreshing to see that they include base models as well,” one user remarked.

Another user highlighted the impact of multimodal support, calling the 28B variant’s vision features “cool to have”.

ERNIE’s evolution, demonstrated by Ernie Bot’s 300 million users and by ERNIE 4.5’s reported benchmark results ahead of GPT‑4.5, underscores Baidu’s growing ambition in global AI.

By open‑sourcing a wide model range, Baidu aims to appeal to both research labs and developers seeking scalable, multimodal AI tools.